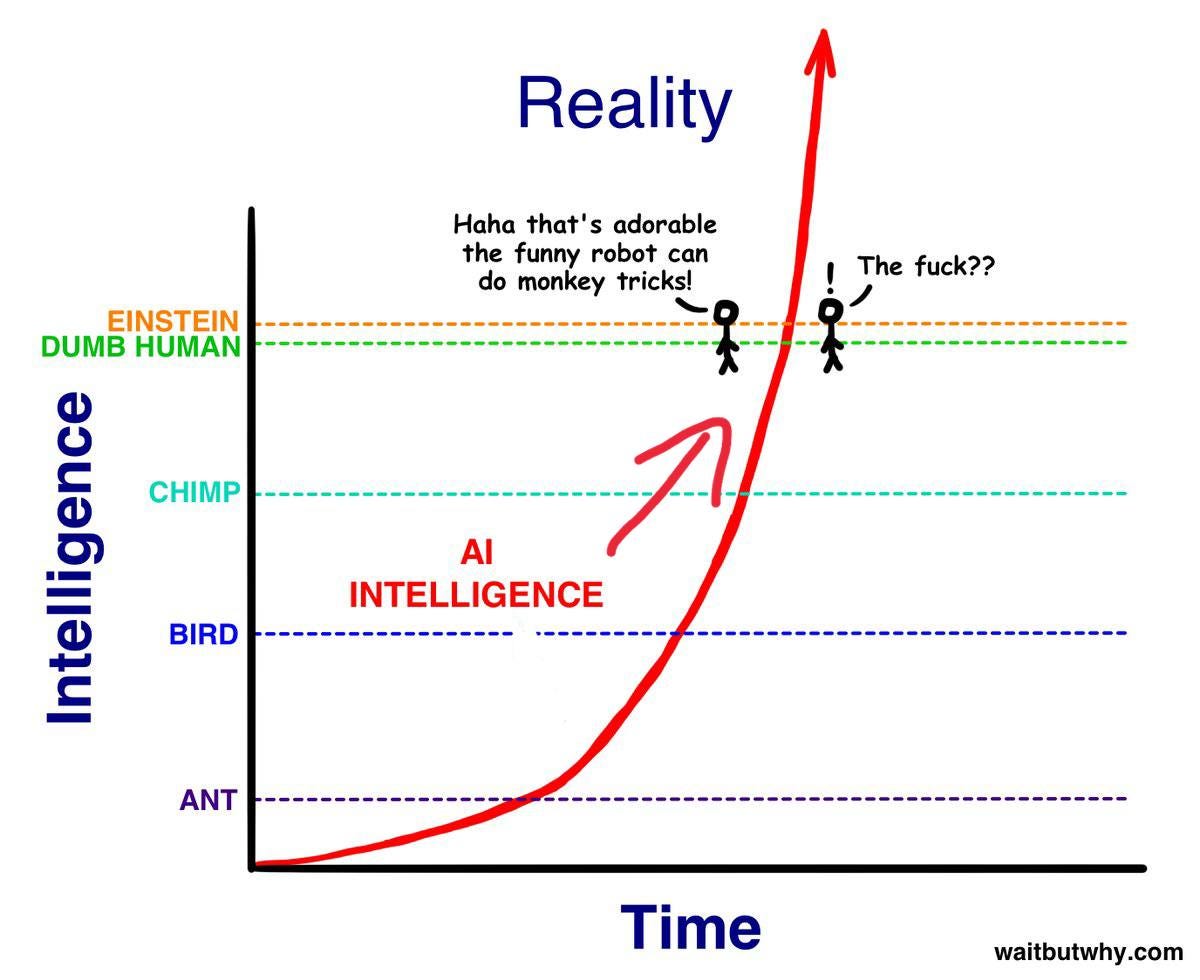

I am not being hyperbolic. I truly believe that we are at the precipice of a world-changing paradigm being ushered in by the advent of accessible AI.

I also believe that virtually everyone on earth is either unaware, or is underestimating the impact this is going to have on our lives.

The closest thing I can think to relate this to is the impact that the internet had on humanity.

AI is going to be even more disruptive.

My goal in writing this Letter is to open people’s eyes. To help you (and to help myself, through researching) better understand what is happening, and to attempt to understand what is likely to happen.

To help prepare ourselves for an impossible-to-prepare-for future.

Our perception of reality is going to forever shift.

We’re going to bring people back from the dead.

Almost every currently conceivable job will be doable by AI in the future (note that I said will be doable, not will be done).

There’s a lot to be afraid of. There’s also a lot to be excited by.

Let’s dig in deeper.

What is AI?

Always good to start with definitions.

Artificial intelligence (AI) is intelligence—perceiving, synthesizing, and inferring information—demonstrated by machines, as opposed to intelligence displayed by animals and humans. Example tasks in which this is done include speech recognition, computer vision, translation between (natural) languages, as well as other mappings of inputs (https://en.wikipedia.org/wiki/Artificial_intelligence)

Pretty abstract and theoretical and open-ended. That’s by design though. AI is, effectively, getting a machine to do something. It used to be super basic. A calculator, that’s AI. Arguably an abacus is AI too: a tool used to perform complex calculations.

No longer do we need to do 42069 x 33333 by hand (god knows why anyone would have needed to do that anyway). Now we can just open up a calculator app and bingo bango bongo, we know the answer is 1,402,285,977.

Actually we no longer even need to open up a calculator app. We can just go to Google and type it in, or better yet: hey Siri, what’s forty two thousand and sixty nine multiplied by thirty three thousand three hundred thirty three?
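For the curious, here is roughly what "doing it by hand" looks like when you hand the job to a machine instead. This is just a minimal sketch in Python, and the function name `long_multiply` is made up for illustration; it multiplies one digit at a time, the way we were taught in school, and adds up the partial products.

```python
def long_multiply(a: int, b: int) -> int:
    """Multiply two non-negative integers the schoolbook way."""
    total = 0
    # Walk through the digits of b from right to left (ones, tens, hundreds...).
    for place, digit in enumerate(reversed(str(b))):
        # Each partial product is shifted by its place value, then summed.
        total += a * int(digit) * 10**place
    return total


product = long_multiply(42069, 33333)
print(f"{product:,}")  # prints 1,402,285,977
```

Of course `42069 * 33333` gives the same answer in one step, which is kind of the point.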

AI is improving.

It is far beyond the scope of this post to dig into the technical nuances of how AI works. I am not an expert on that, and it would probably fly above the heads of most of my readers (..no offense?) — it goes well over my head too.

I effectively just take it for granted that super smart people have figured out super smart stuff and now we’re seeing all sorts of insane practical applications, and it is those that I want to talk more about.

Just like I don’t really know how my car, the internet, or theoretical physics work — I still know how to drive from A to B, play video games with friends online, and that if I drop an apple gravity will take it to the ground.

Why is everyone talking about AI now?

AI is not new. As we discussed above, it has been around in some form or another for a very long time. Even recently, AI has been a massive topic of research and application across many industries. Still, it has remained a somewhat niche area. Occasionally we’d hear about AI getting good enough to beat someone at Chess, or Go, or Poker, or another game.

Now, though, more and more people are talking about AI. This is because of the rapid pace with which consumer applications are hitting the market.

Most people reading this have probably heard about Grammarly, DALL-E, Midjourney, Stable Diffusion, etc. Most recently making waves is ChatGPT.

There’s something all of the above programs have in common: they do what we used to think was impossible.

Most of us used to think computers were great because they did the boring data-heavy jobs, the “left-brain” stuff. Numbers, spreadsheets, databases, great, let’s get a computer to save us time. We don’t mind computers getting better and faster at this stuff; at least they’ll never be able to take over the creative side of things: creating art, writing poetry, etc.

Oh how wrong we were.

Most of us are ill-prepared to live in a world where AI can do the creative side of things. Furthermore, we’re still at the pocket calculator stage for this stuff.

This is an image that won an art contest at the Colorado State Fair. It was created with Midjourney, an AI art engine that creates art based on text-based prompts from a user.

There’s a whole ethical conversation that I am going to sidestep here — but I might cover it in a follow-up post. I find it fascinating, but for the sake of brevity in what is already going to be a long letter, we’ll avoid this landmine temporarily.

Check out this thread for some more examples of art created by AI:

A few examples from it:


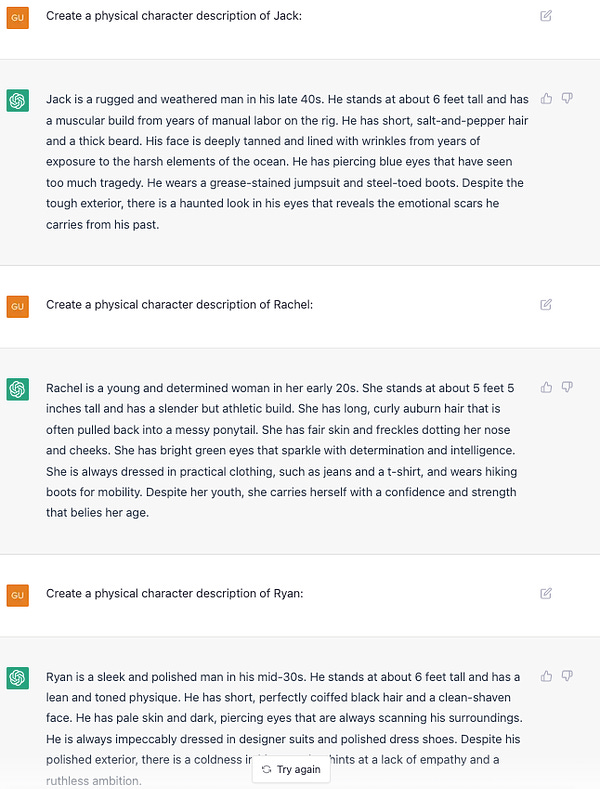

ChatGPT

This requires a section of its own. What is ChatGPT? Let’s ask it:

It’s basically a chat-bot that can do.. almost anything.

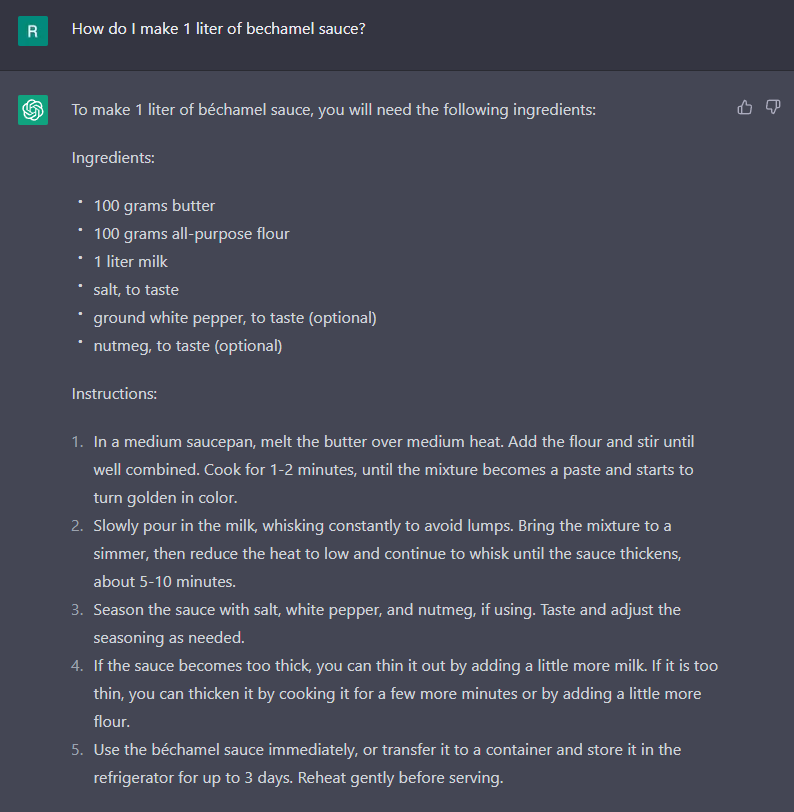

A lot of people are calling it the “Google killer”. In many ways, it is. Want a recipe? Just ask ChatGPT. You no longer have to read/scroll past 9 paragraphs of someone’s life story just to get to a recipe, littered with ads and multiple unit types. Just. Ask. ChatGPT:

Ah, but it assumes we have bechamel sauce! A human would probably have added a recipe for that! Okay.. 4 seconds later:

Cool. This is not even beginning to scratch the surface of what ChatGPT can do. Maybe it’s a Google Killer in some respects — about time they had some real competition anyway.

What if I told you it’s a coder killer, a copywriter killer, a lawyer killer? A game designer killer? Because it kinda is.

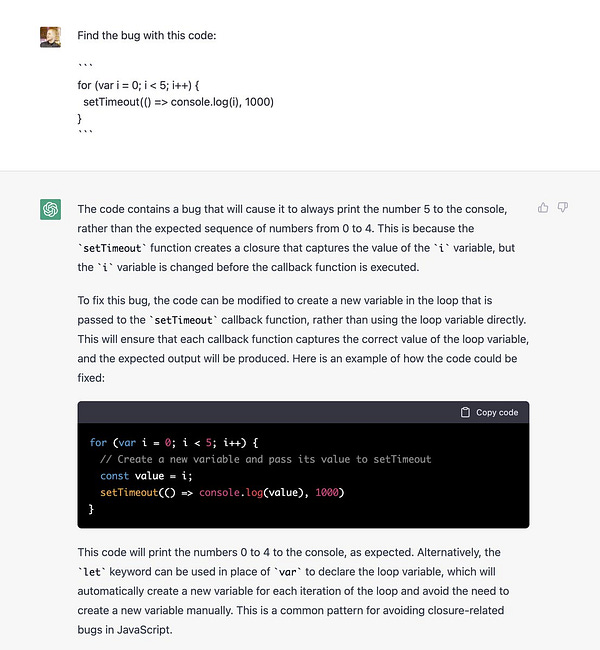

Here it is debugging code:

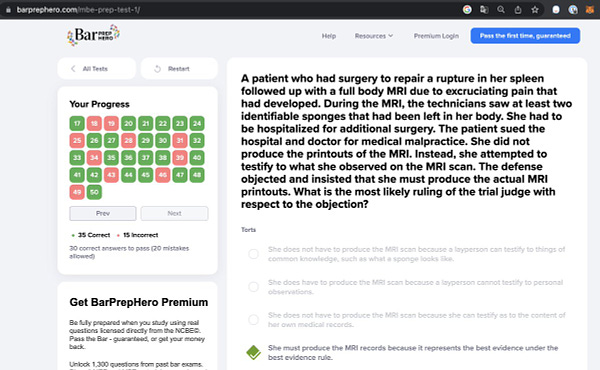

HERE IT IS PASSING THE FREAKING BAR EXAM:

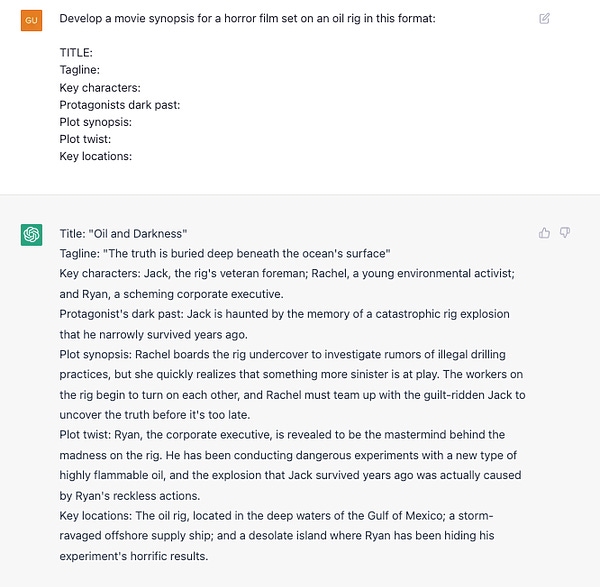

Here it is writing a movie, and then using Midjourney to create the characters / concept art:

This person created a Twitter bot without knowing any programming language.

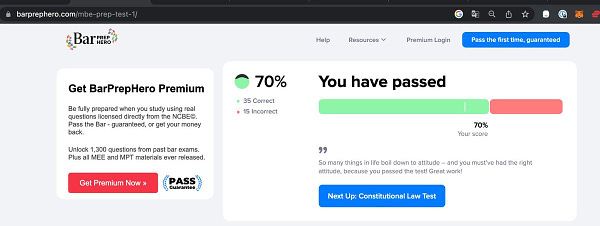

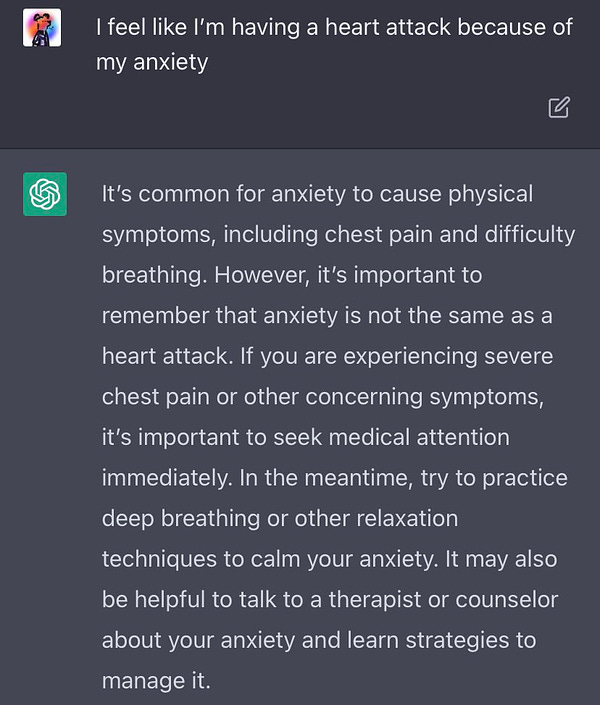

Here it is as a Mental Health chat replacement/alternative:

Have an essay to do for school? Why not ask ChatGPT to write it for you…

(I literally just asked it this now):

Like… I am still just scratching the surface here. The other thing to remember is: it is only going to get better over time.

You can ask it to write for you, to distill information for you, to code for you, to be creative for you, to reply to emails for you, to reply to emails as you, to reply to emails for you as someone else.

You no longer really need to know how to code to create something. You can just ask ChatGPT to write the code, to debug it, to tell you how to deploy it, to test it for you. Want to start a blog but suck at writing? Just ask ChatGPT to write in the style of your favourite writer(s). Maybe edit it a bit. You’ll probably end up with a blog better than 99% of what exists out there.

This sums it up:

I recommend everyone tries ChatGPT themselves. It’s (currently) free.

Bringing people back from the dead

Say what?

Of course we’re not actually bringing people back from the dead, but we’re effectively bringing people back from the dead. This begins a much larger conversation about our perception of reality, and I’ll get to that in a bit, for a bit, but first.. what the hell am I talking about?

Basically, the ability to upload content (text, audio, video) of a person and have it be processed by AI to then create an entity that, for all intents and purposes, talks, acts, and “thinks” exactly like the person.

Podcast.ai is a podcast entirely generated by AI. There are two episodes so far.

Lex Fridman interviewing Richard Feynman, and Joe Rogan interviewing Steve Jobs.

Two current interviewers interviewing people who are dead. In their own words:

Whether you're a machine learning enthusiast, just want to hear your favorite topics covered in a new way or even just want to listen to voices from the past brought back to life, this is the podcast for you.

So that’s something.. what about for the rest of us?

Re;memory is a service where you can have a virtual human created of a loved one, so you can have conversations with them.

I have been re-watching Black Mirror while researching this Newsletter, and it is astonishingly eerie. Especially relevant here is the episode Be Right Back:

"Be Right Back" is the first episode of the second series of British science fiction anthology series Black Mirror. It was written by series creator and showrunner Charlie Brooker, directed by Owen Harris, and first aired on Channel 4 on 11 February 2013.

The episode tells the story of Martha (Hayley Atwell), a young woman whose boyfriend Ash Starmer (Domhnall Gleeson) is killed in a car accident. As she mourns him, she discovers that technology now allows her to communicate with an artificial intelligence imitating Ash, and reluctantly decides to try it.

Humans have never been able to actually interact with dead people. Perhaps in our dreams, perhaps in our daydreams. We have been able to go within our minds and imagine what a conversation might have been like with someone that passed away. What they might have said, what we hoped they would say, what we wished we had said to them.

We will all soon have the ability, if we choose (and if there’s enough footage), to interact with those we have lost.

I am not sure any of us can properly prepare for this.

You know those magical portraits in Harry Potter where they talk to people as they walk by?

Is it so difficult to imagine having digital picture frames in our houses injected with AI so that we might be able to keep our loved ones around, forever?

Is this a good thing? How will our brains process this?

There is simply no way to plan or prepare for what the future holds for us.

What about when robotics technology also sufficiently advances to be able to create robots with our loved ones injected into them?

What if someone creates these without permission? Wait, who has to give permission in the first place? The descendants? Is this like organ donation? Will the family have a say?

Will people begin preparing content so they may live forever as a machine?

Was Ross right?

Might even have nailed the 2030 timeline too.

I could muse a lot more on this topic and the philosophical implications, but that’s (another) another topic for another day. If you want to read more, this is a thoughtful post by philosophy professor Eric Schwitzgebel: Speaking with the Living, Speaking with the Dead, and Maybe Not Caring Which Is Which.

The Entire History of You

Let’s look at another case of Black Mirror predicting the future.

"The Entire History of You" is the third and final episode of the first series of the British science fiction anthology television series Black Mirror… the episode premiered on Channel 4 on 18 December 2011.

The episode is set in a future where a "grain" technology records people's audiovisual senses, allowing a person to re-watch their memories. The lawyer Liam (Toby Kebbell) attends a dinner party with his wife Ffion (Jodie Whittaker), becoming suspicious after seeing her zealously interact with a friend of hers, Jonas (Tom Cullen). This leads him to scrutinise his memories and Ffion's claims about the nature of her relationship with Jonas.

The episode was designed to be set in 2050…

So we’re a few decades off, but the writing seems to be on the wall already.

Let me introduce you to Rewind: The Search Engine For Your Life.

With rewind, you can find anything you’ve seen, said, or heard.

Basically, you install it on your computer, and you can then search…everything. Conversations you’ve had (text or video/audio), apps you’ve used, things you’ve watched… everything. Watch this clip for a quick demo of it:

They say that their vision is to give people “perfect memory”.

Perfect memory… that seems amazing.

It also seems like something we are utterly unprepared for. Just like chatting to the dead.

Seems great and useful to remember something from a meeting and go back and rewind to find it. Seems scary to think that the government might ask you to rewind the last 72 hours of your life before you board a flight to see if you interacted with any suspicious people.

Technology is a tool. It can be used for good, or it can be used for evil.

"The greatest dangers to liberty lurk in insidious encroachment by men of zeal, well-meaning but without understanding." - Louis D. Brandeis

That’s not to say that this is gonna end poorly. But when creating a new technology, you must not think only about how you might use it or how you might wish for it to be used, but also about how it might be used by a person with less noble desires.

I’m not saying anything bad against the founders of rewind.ai. I’m sure they’re great people with nothing but the best of intentions, and I doubt they are the only people working on this anyway. It seems like inevitable technology.

Instead, a simple warning: we must protect our freedom, before we have no freedom left to protect.

How about them DeepFakes?

It should come as no surprise that we are rapidly approaching the point at which fake video content can be made that is, at least initially, indistinguishable from “real” video content.

Fake News is only going to get worse as time goes on. In a world where our attention spans are the size of peanuts and more and more of us doom scroll 10-second TikToks, Shorts, and Reels and take what we see as gospel… fake truths can spread faster than facts can be checked.

Many have been skeptical of the written word for a long time. Far fewer are skeptical of video & audio content.

I implore you to begin to question every piece of video content you see. The vast, vast, vast majority is currently “real” and genuine. There will be a point, probably in the not super distant future, where the vast, vast, vast majority is fake.

Remember: this technology is only going to get better.

What are the risks of AI?

I was getting tired and this Letter is already long, so I used ChatGPT to write this section.

There are several risks associated with the development and use of artificial intelligence (AI). Some of the potential risks and concerns include:

Bias in data: AI systems are only as good as the data they are trained on. If the data is biased, the AI system will also be biased, which can lead to unfair and biased decisions being made.

Lack of transparency: Some AI systems, particularly those that use deep learning algorithms, can be difficult to understand and interpret. This lack of transparency can make it difficult to determine how the AI system is making decisions, which can be a concern when the decisions have significant consequences.

Privacy concerns: The use of AI can raise privacy concerns, as it often involves the collection and processing of large amounts of personal data.

Job displacement: The use of AI in various industries could potentially lead to the displacement of human workers, as machines may be able to perform certain tasks more efficiently and accurately than humans.

Security risks: AI systems can be vulnerable to hacking and other forms of cyberattacks, which can have serious consequences if the system is being used in a critical application such as healthcare or transportation.

Misuse of AI: AI systems can be misused or abused if they are not designed and implemented responsibly. For example, AI systems could be used to spread disinformation or to facilitate cybercrime.

Overall, it is important to consider the potential risks and unintended consequences of AI when developing and deploying these systems, and to take steps to mitigate these risks through careful planning and oversight.

Not bad, eh?

(I’m back, it’s me again)

Obviously anyone with a brain cell could tell that the above was not written by me: the style and tone were totally different. If I were less lazy, though, I would have re-written it in my own style. The content is GOOD. The information is accurate. Those ARE a bunch of very real risks, and downsides.

I did this to kinda prove (or disprove) a point: AI is not about to totally replace content creators’ (or most people’s) jobs overnight. It’s a tool, it’s very rough around the edges, and it has a long way to go before it actually displaces most people’s jobs.

Give it 5-10 years. Is that a long time? To replace 75% of humans’ jobs?

I don’t think that’s the right question to be asking.

Is that enough time for us to figure out how to adjust to our new normal? To come up with new jobs, or UBI (universal basic income), or some new paradigm where money doesn’t even matter?

I doubt it.

I suspect we will, in the not too distant future, go through a period of immense disruption.

It has already begun.

This is scary, but it is also exciting.

There are an infinite number of opportunities opening up.

People who had zero coding experience can now make their own apps and pieces of software.

People with great movie ideas but no writing experience can now begin to put together a potentially excellent script.

People with vivid imaginations but no artistic experience can now create visually stunning images and worlds.

People with poor writing abilities can now communicate clearly and professionally.

People without the money to hire a personal assistant can now get a chatbot virtual assistant.

People who don’t speak the same language can already communicate with one another.

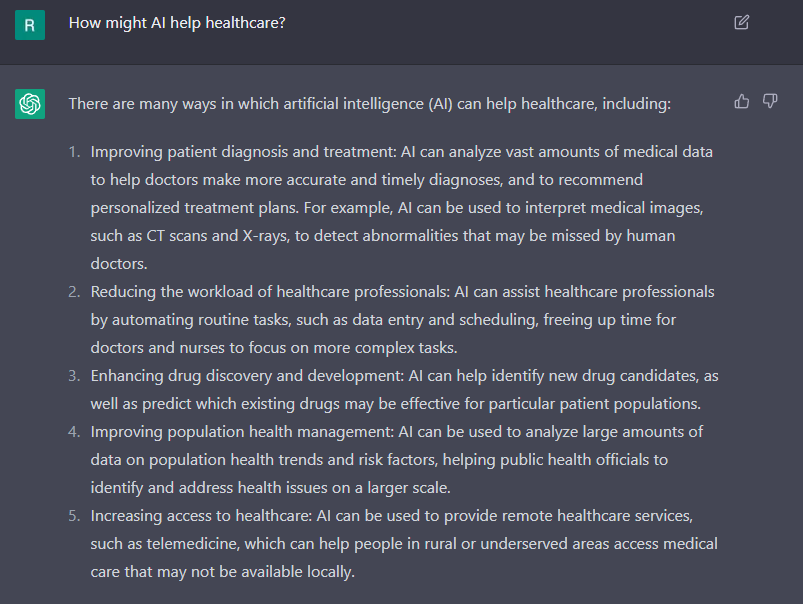

Healthcare will be improved:

The list goes on.

So, what should we do?

The future is more unpredictable than it has ever been. I can’t stress enough that I barely scratched the surface in this Letter; there is so much more happening in this space.

When our perception of reality is being challenged, when what it means to be dead or alive is up for debate, and when jobs that people have trained 20 years for can now be done by a 15-year-old with an internet connection.. massive disruption is inevitable.

I think one of the largest threats is the lack of purpose and meaning that many people are soon going to experience. The job displacement on such a mass scale will be scary.

Yes, new jobs will be created. But it will take time for those to eventuate, and for people to learn how to do them. Many jobs will never be replaced.

Long term, this is an incredibly exciting time. AI technology will allow us to do things literally impossible without it: cure diseases thought incurable, travel to distant planets, speak to the dead. It will free up so much collective time that humanity has thus far spent on menial jobs simply to survive, that it has the potential to usher in a Utopia.

Dystopia is also possible.

Maybe both are inevitable.

Who knows.

This tweet by Sam Altman, the CEO of OpenAI, sits well with me and sums it up nicely:

Adaptability and Resilience.


Disclaimer: The content covered in this newsletter is not to be considered as investment advice. I’m not a financial adviser. These are only my own opinions and ideas. You should always consult with a professional/licensed financial adviser before trading or investing in any cryptocurrency related product.
